Online and over-the-top (OTT) video viewership is increasing at a tremendous rate. At the same time, viewers’ tolerance for poor quality of experience is shrinking toward zero. This combination is going to drive significant optimization in adaptive streaming technology in the coming months and years.

Over the last several years, we’ve seen traditional UDP-style streaming technologies fade away in favor of HTTP-based adaptive streaming formats like MPEG-DASH, HLS, HDS, and Smooth Streaming. This shift provides a number of benefits. By using short, aligned video segments at varying quality levels, adaptive video is able to leverage the strengths Content Delivery Networks (CDNs) already have in their caching and edge infrastructure to deliver smooth video over the Internet.

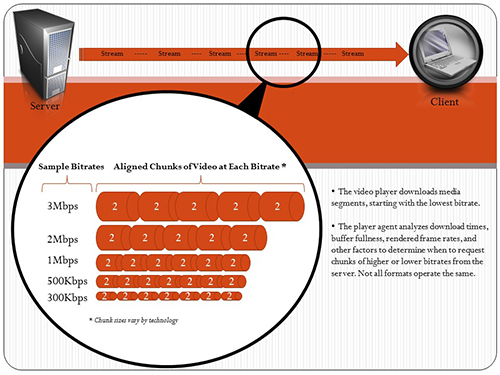

Let’s touch briefly on how this works. In adaptive streaming, performance is regulated through heuristics built into the video player. The player reads a stream manifest, which tells it a bit about the audio and video, the available quality levels, and where to find the content. In fact, the video in an adaptive stream is technically not really a stream at all! It’s actually composed of small, downloadable video segments (2 to 10 seconds in length in most cases) available in several different bitrates. The player starts by downloading and playing back the lowest quality segments, and then analyzes how well it is keeping up. If it’s doing great, it starts requesting higher quality levels, taking a moment at each step to assess performance. This is why the videos you watch so often look fuzzy for the first few seconds: your video player is starting small until it is satisfied with how well it is performing. It keeps stepping up until it reaches the highest quality level it can handle. If the network becomes congested, the player starts going back down the quality stack. Basically, the player is analyzing its own performance throughout the viewing process, and deciding which quality level to download based on that analysis.
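To make the step-up and step-down behavior concrete, here is a minimal sketch of a throughput-based heuristic. It is an illustration only, not the logic of any particular player: the function names, the bitrate ladder, and the 0.8 safety factor are all assumptions I’ve made for the example, and real players typically weigh other signals as well, such as buffer level and dropped frames.

```python
from typing import List


def pick_bitrate(ladder_kbps: List[int], current_kbps: int,
                 measured_kbps: float, safety: float = 0.8) -> int:
    """Pick the bitrate for the next segment (hypothetical heuristic)."""
    ladder = sorted(ladder_kbps)
    idx = ladder.index(current_kbps)
    # Only plan to use a fraction of the measured throughput (assumed margin).
    budget = measured_kbps * safety

    # Congestion: fall back to the highest level that still fits the budget,
    # or to the lowest level if nothing fits.
    if current_kbps > budget:
        fitting = [b for b in ladder if b <= budget]
        return fitting[-1] if fitting else ladder[0]

    # Doing great: move up one level at a time, then reassess.
    if idx + 1 < len(ladder) and ladder[idx + 1] <= budget:
        return ladder[idx + 1]

    return current_kbps


def simulate(ladder_kbps: List[int], throughput_kbps: List[float]) -> List[int]:
    """Return the bitrate requested for each segment, starting at the lowest."""
    current = min(ladder_kbps)
    requested = []
    for sample in throughput_kbps:
        requested.append(current)
        current = pick_bitrate(ladder_kbps, current, sample)
    return requested


if __name__ == "__main__":
    ladder = [400, 1200, 3000, 6000]
    # Healthy network for four segments, a congestion dip, then recovery.
    print(simulate(ladder, [8000, 8000, 8000, 8000, 1000, 8000]))
```

The simulated session starts at the lowest level, climbs one level per segment while throughput is healthy, and drops straight back down when the measured throughput collapses, which is the fuzzy-then-sharp pattern described above.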

This process of negotiating quality based on playback heuristics generally does a good job managing performance, but there are limits to this method’s effectiveness. What happens when the viewer’s bandwidth, or their system’s ability to play back the content, is not the source of the problem?

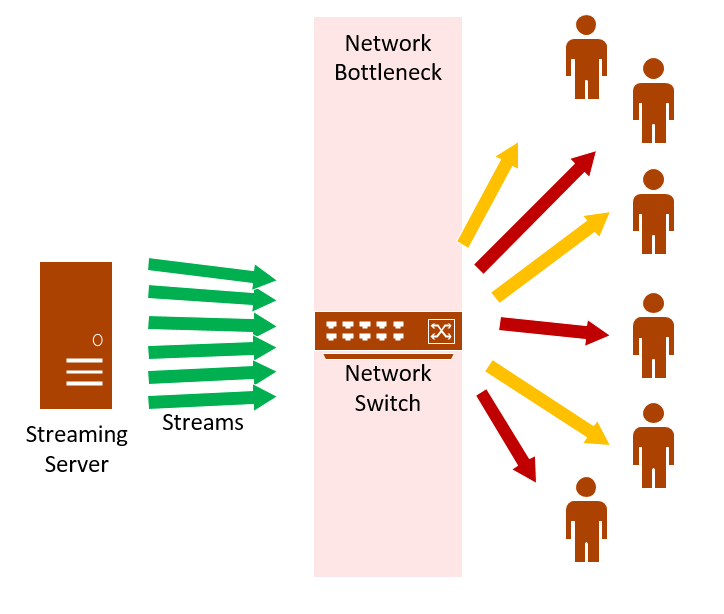

To explore this topic, it might be best to look at scenarios that can negatively affect performance. One that I’m very familiar with from my work in the enterprise is related to network capacity. Many enterprises have closed internal networks architected with just enough headroom to support day-to-day business activities like email, document sharing, conference calls, and so on. Video, on the other hand, is a bandwidth hog that quickly drives a network to congestion (an IT executive I once worked with referred to video as the cholesterol of the network). When networks get congested, playback quality suffers for everyone. To solve this problem, a handful of companies have come to the table with Software-Defined Networking (SDN) solutions based on peer-to-peer delivery. Hive Technologies, Streamroot, and Kollective are a few of the companies leading with these types of approaches.

In the typical scenario of limited network capacity, performance problems are produced by network bottlenecks: areas of the network where there’s just too much data trying to traverse at the same time. These bottlenecks are usually the result of too many simultaneous viewers pulling streams from the server or CDN, and often occur during live webcasts where large audiences are all viewing at the same time. Even though all viewers are watching the same program, they must all go back to the source to obtain their own stream segments. By contrast, in peer-to-peer scenarios, intelligence built into the delivery solution seamlessly sends viewers to other nearby viewers to obtain segments. This limits the number of viewers going all the way back to the source, thereby relieving the bottlenecks in the network.
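The effect on the source is easy to see in a toy model. The sketch below is my own simplification and not how any of the vendors above implement delivery: `Peer`, `choose_source`, and `deliver` are hypothetical names, "nearby" is reduced to "on the same site", and viewers are assumed to request the segment one after another.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Peer:
    name: str
    site: str                                    # e.g. an office location
    cache: Set[int] = field(default_factory=set)  # segment numbers held


def choose_source(viewer: Peer, segment: int, peers: List[Peer]) -> str:
    """Prefer a peer on the same site that already holds the segment."""
    for peer in peers:
        if peer is not viewer and peer.site == viewer.site and segment in peer.cache:
            return peer.name
    return "cdn"  # nobody nearby has it yet, so go back to the source


def deliver(segment: int, viewers: List[Peer]) -> Dict[str, int]:
    """Deliver one segment to every viewer and count where it came from."""
    counts = {"cdn": 0, "peer": 0}
    for viewer in viewers:
        source = choose_source(viewer, segment, viewers)
        counts["cdn" if source == "cdn" else "peer"] += 1
        viewer.cache.add(segment)  # the viewer can now serve this segment
    return counts


if __name__ == "__main__":
    audience = [Peer(f"hq-{i}", "hq") for i in range(5)] + [Peer("branch-0", "branch")]
    print(deliver(1, audience))
```

With five viewers at one site and one at another, only the first viewer at each site reaches back to the CDN for the segment; the remaining four fetch it from a neighbor.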

But what if the problem isn’t the network, but instead an issue with a particular CDN or CDN edge server? This is one of the challenges a company called DLVR is aiming to solve with a trademarked approach they call “Responsive Manifests”. By doing real-time analysis on many different variables (device, network, location, video characteristics, CDN performance, etc.), the platform creates a unique manifest for each viewer in real time, optimized to provide each individual with the best performance possible. Remember, in traditional adaptive streaming, the player obtains the manifest (the blueprint for the stream), and uses it to determine how to play back the content. In this scenario, the manifest is being continually rewritten in response to delivery performance. Is a CDN edge server having a moment of trouble? No problem, just rewrite the manifest to send the player to a different edge server (or a different CDN altogether) until the problems resolve.
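As a rough illustration of the idea of rewriting a manifest in response to CDN health, here is a small sketch that operates on an HLS-style media playlist. This is not DLVR’s implementation, which I have no visibility into: the function names, the example hostnames, and the use of a single error-rate number per CDN are all assumptions for the sake of the example.

```python
from typing import Dict


def pick_cdn(error_rates: Dict[str, float]) -> str:
    """Return the base URL with the lowest recent error rate (assumed metric)."""
    return min(error_rates, key=error_rates.get)


def rewrite_playlist(playlist: str, base_url: str) -> str:
    """Point every segment URI in an HLS-style playlist at base_url."""
    rewritten = []
    for line in playlist.splitlines():
        # In an HLS playlist, non-blank lines that do not start with '#'
        # are URIs; everything else is a tag or comment and is left alone.
        if line and not line.startswith("#"):
            filename = line.rsplit("/", 1)[-1]
            line = f"{base_url.rstrip('/')}/{filename}"
        rewritten.append(line)
    return "\n".join(rewritten)


if __name__ == "__main__":
    playlist = "\n".join([
        "#EXTM3U",
        "#EXTINF:6.0,",
        "https://cdn-a.example.com/video/seg1.ts",
        "#EXTINF:6.0,",
        "https://cdn-a.example.com/video/seg2.ts",
    ])
    # Hypothetical health data: cdn-a is having a moment of trouble.
    health = {
        "https://cdn-a.example.com/video": 0.20,
        "https://cdn-b.example.com/video": 0.01,
    }
    print(rewrite_playlist(playlist, pick_cdn(health)))
```

Because the player simply follows whatever URIs the manifest gives it, the switch to the healthier CDN needs no change on the player side.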

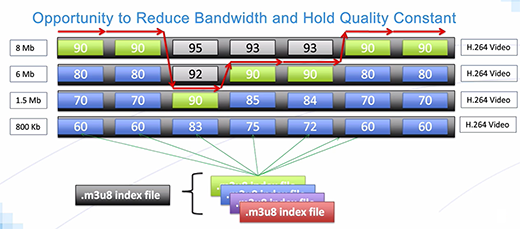

Optimization isn’t only focused on quality of experience. There is plenty of attention centered on efficiency and cost of delivery as well. For example, Telestream recently announced adaptive bitrate optimization capabilities in their Vantage media processing product. It aims to determine where quality improvements actually fail to be perceptible, and then just writes those video segments out of the manifest. For example, if a video is encoded at a top bitrate of 8 megabits per second, but a particular scene is very simple and the 8 megabit chunks offer no perceivable benefit over the 1.5 megabit chunks, it will just rewrite the manifest to make the 1.5 megabit chunk the highest available quality for as long as that is the case. This means audiences won’t be downloading high bitrate chunks when the lower bitrate media can do the same job, thereby reducing CDN data costs. Netflix, being one of the largest providers of OTT video, also continually pushes the boundaries in optimizing adaptive video delivery. From encoding optimization, to quality analysis, to using predictive analytics to detect problems, Netflix is amazingly transparent in their efforts, and their tech blog should be on every streaming enthusiast’s reading list.
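The capping decision can be sketched in a few lines. To be clear, this is not Telestream’s algorithm: I’m assuming that some perceptual quality score (0 to 100, higher is better) is available per bitrate for each scene, and the scores, the `cap_ladder` name, and the one-point threshold are all made up for illustration.

```python
from typing import Dict, List


def cap_ladder(scores_by_kbps: Dict[int, float], min_gain: float = 1.0) -> List[int]:
    """Return the bitrates worth keeping in the manifest for one scene.

    Keeps levels up to the lowest bitrate whose score is within min_gain
    of the top level's score; anything above that is written out.
    """
    ladder = sorted(scores_by_kbps)
    best = scores_by_kbps[ladder[-1]]
    for i, kbps in enumerate(ladder):
        if best - scores_by_kbps[kbps] < min_gain:
            return ladder[: i + 1]
    return ladder


if __name__ == "__main__":
    # Hypothetical scores: a simple scene looks the same at every bitrate,
    # while a complex scene keeps improving all the way up.
    simple_scene = {1500: 95.5, 4000: 95.8, 8000: 96.0}
    complex_scene = {1500: 70.0, 4000: 85.0, 8000: 95.0}
    print(cap_ladder(simple_scene))
    print(cap_ladder(complex_scene))
```

For the simple scene only the 1.5 megabit level survives, so no viewer downloads the 8 megabit chunks for that stretch of the video; for the complex scene the full ladder is kept.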

In this post, I’ve aimed to provide just a few examples of solutions already on the market that focus on adaptive streaming optimization. Clearly, for every solution that has made it to market, there are countless more working their way through labs. This is not surprising because, as amazing as the technology is, there is still plenty of room for improvement. Search for “Adaptive Streaming Optimization” and you’ll find no shortage of research papers exploring everything from improving adaptive streaming performance over wireless networks to improving the efficiency of player heuristics. It all points to the fact that the foundation of adaptive streaming is solid and here to stay, but the era of adaptive streaming optimization has only just begun.